Since 13 July, the battle between the long-awaited iPad and several e-readers has been under way in the Netherlands. But what are the downsides of the iPad as an e-reader, and of an e-reader as an iPad? Let’s find out!

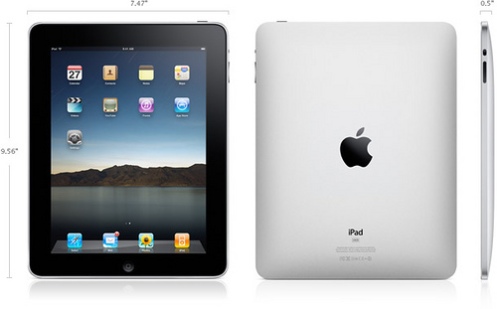

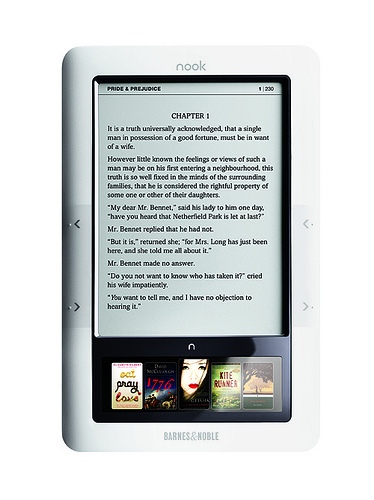

Many analysts forecast that the launch of the iPad will be devastating for the e-reader market, although e-reader purists disagree. One big difference between the iPad and an e-reader is the screen. The iPad uses what Apple calls the more advanced IPS (In-Plane Switching) screen technology. This technology, which originated in Hitachi’s research efforts in 1996, is known for its wide viewing angle. Several years and generations later it has become affordable enough for use in the iPad, where it gives the user a viewing angle of 178 degrees. Of course, the better viewing angle was a necessity, since iPad applications are designed for both landscape and portrait orientation.

Most e-readers, by contrast, use electronic ink technology, which is (still) grayscale. According to its advocates it is easier on the eyes for heavy readers, consumes less battery power and keeps the device lightweight. Another difference is the reflection and blurring on the iPad screen, most noticeable when it is used outdoors. Most e-ink screens do not suffer from sunlight in this way, provided they are combined with a non-reflective screen surface. The screen of the Nook, for instance, needs no backlight and uses the ambient light that falls on it. It behaves much like an ordinary sheet of paper, which you read by the light of your surroundings. The only downside of e-ink screens is the lack of color in the e-readers currently on sale. The manufacturer, E Ink, has promised color screens for 2011.

There are some doubts about e-ink technology, which is known for its slow refresh rate. This is partly an advantage compared with LCD screens, which refresh 60 times per second and are therefore harder on the eyes, but it also limits what e-readers can do: the slow refresh rate makes e-ink unsuitable for video or web browsing. And that is actually the biggest difference between e-readers and the iPad: the two devices are better suited to different purposes. The iPad, for instance, is noticeably heavy and therefore not really suited to long reading sessions. You might object that big books get read as well, and you would be right, but not for eight hours in a row. For some, the iPad is also a little too big to carry around conveniently, whereas the Nook is the size of a paperback and lightweight too.

On top of these benefits, the e-reader’s battery lasts far longer than the iPad’s, because an e-ink screen only uses power when the image changes. The iPad can be used for ten hours straight as an e-reader, which is a reasonable span for an LCD screen of that size in a mobile device. The e-reader is said to have a battery life of one month, which makes it a good companion on a steamboat trip, whereas the iPad will get you through a plane flight without getting bored. In practice the iPad’s shorter battery life will not be a big problem, because power outlets are available in most situations.

eBook shops

In e-reader land there has always been a strong tie between e-readers and ebooks, mainly because companies try to bind consumers to their own online ebook store. For the iPad this meant that consumers depended on what the iBooks online store had to offer. A while ago iBooks reached an agreement with the Dutch Centraal Boekhuis to sell many more Dutch titles, which brings the total number of Dutch titles on offer to 250,000 as of September this year. Furthermore, the iPad can run various applications that let you read ebooks from the Nook and Kindle stores, whose catalogs hold 1 million and 700,000 ebooks respectively. These catalogs are typically English-language, but for the more internationally oriented Dutch reader that won’t be a problem. By the way, ebook prices in the different online stores are on average the same.

Subscription

If you want to use your iPad with mobile internet, you need a subscription to a 3G wireless network. It is possible to use Wi-Fi instead, but that won’t give you full freedom to go online while travelling. In the Netherlands a subscription costs between 20 and 30 euros a month, depending on the time you spend online or the amount of data you need for your online activities. The Kindle e-reader is also available to Dutch consumers, with 3G wireless access included in the purchase price. The e-ink screen on the Kindle appears to have been upgraded with better contrast and a faster refresh rate, but the difference in browsing experience between the iPad and the Kindle will remain substantial, given that e-ink refresh is still ‘slow’ compared with the iPad’s LCD screen. The Nook also comes with 3G wireless internet access but is not yet available in Europe.

Conclusion

The differences between the iPad and the e-readers mentioned come down mainly to the defining feature of e-readers: the e-ink screen. Although these screens will continue to be developed, e-ink offers less functionality than an LCD screen. Besides, e-ink screens are relatively expensive to produce compared with LCD screens. Using an iPad as an e-reader is not really what the device is best at. It is way ahead of the e-reader when it comes to surfing the internet, which according to many is where the iPad really delivers what it promises. A laptop arguably gives you the same browsing experience, but the iPad has the advantage in size and weight. In addition, the many applications developed for the iPad are a big incentive to use it, and its intuitive operation helps make the device popular among a broad range of users. Finally, the iPad’s price (from 499 dollars) is higher than that of, for instance, the Amazon Kindle 3, which costs 139 euros for the Wi-Fi model.

What we can conclude is that the iPad isn’t really an e-reader at all, and an e-reader isn’t suitable for surfing the web. So in this case we are comparing apples with oranges. What can be said is that for the die-hard book reader who is looking for an alternative to the paper book, an e-reader is definitely a device to consider, because reading an ebook on an e-ink screen is an excellent experience. The consumer who only occasionally reads an ebook or a digital newspaper, however, is definitely better off with the iPad, because reading an ebook on its LCD screen is good enough. I believe the iPad is far easier to use than most e-readers, mostly because of its touchscreen, which is still not available on, for instance, the Kindle 3. I also think part of the iPad’s value lies in its ‘gadget’ appeal, which is missing from most e-readers, whose look and feel is more plastic and less solid. So for the average consumer who has enough cash in his pocket and is willing to pay monthly for the iPad’s internet access, the iPad will offer more value in the long term.